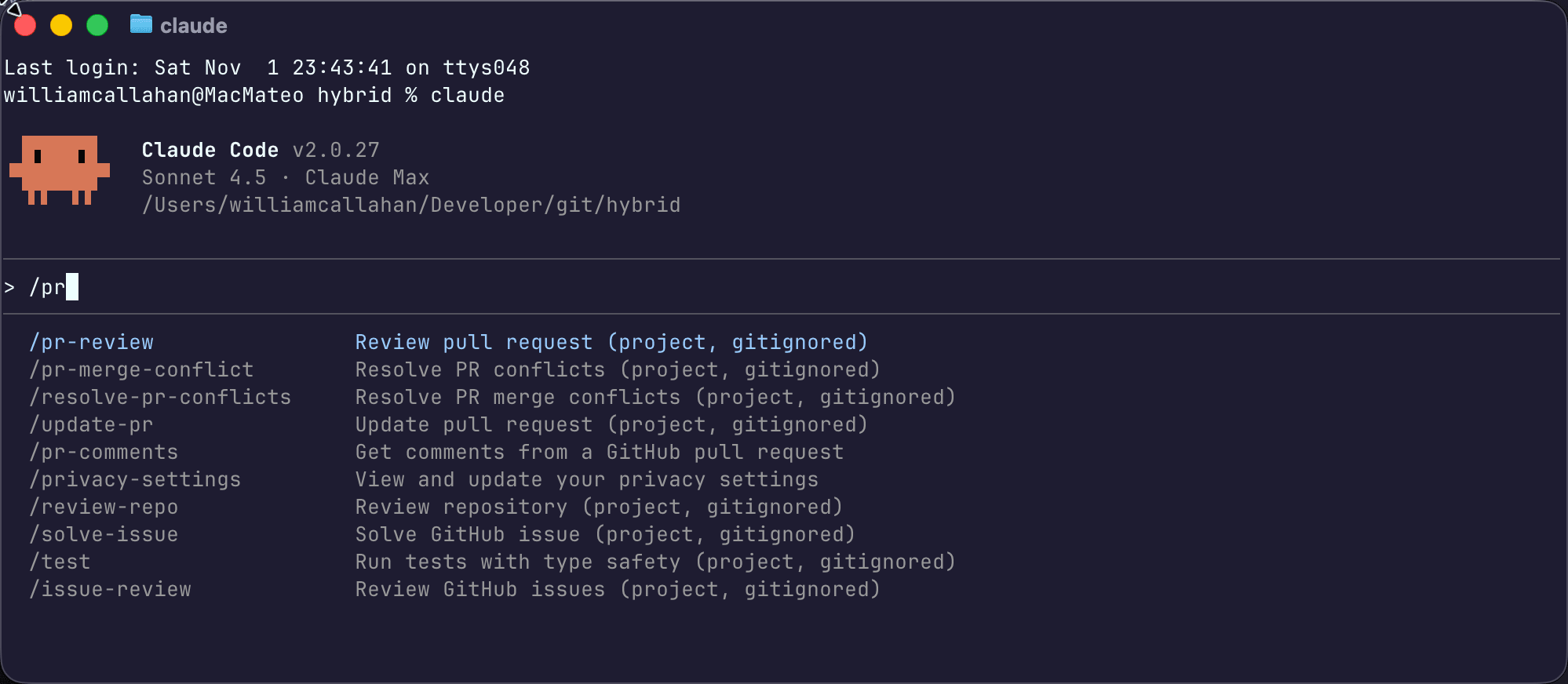

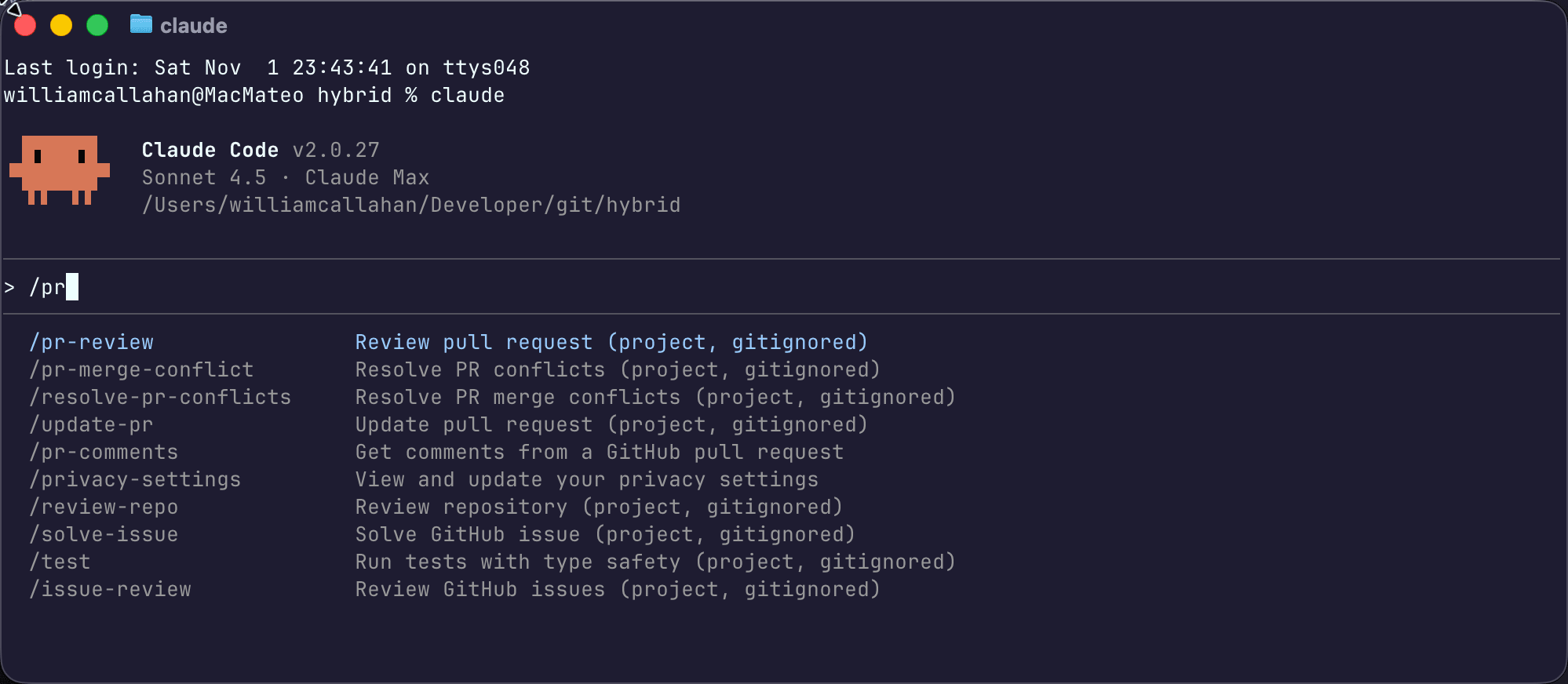

Claude Code: Automatic Linting, Error Analysis, & Custom Commands

Software engineer and founder with a background in finance and tech. Currently building aVenture.vc, a platform for researching private companies. Based in San Francisco.

Claude Code ships with a CLI that can run slash commands without opening the desktop client. This document records the small set of commands that keep a terminal workflow fast: run lint checks, hand stack traces to /analyze, and trigger project-specific helpers.

Claude Code has many tools and agentic behaviors built in behind the scenes, but custom commands are a good way to start using agentic behavior in your own coding workflow.

Below I show how I've been customizing Claude Code.

Claude Code's built-in /analyze, plus custom slash commands such as /project:lint, can run static analysis, trace errors, and handle other project-specific tasks directly in an agentic environment.

Install the CLI globally with npm, then confirm the installed version:

```bash
npm install -g @anthropic-ai/claude-code
claude --version
```

The CLI stores repo-scoped data in .claude/ and global settings in ~/.claude/. Both folders hold plain JSON and Markdown files, so project-scoped files can be committed and personal files kept out of source control.
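Because both scopes are just folders of Markdown files, a few lines of shell are enough to see which commands each one currently provides. A minimal sketch, assuming bash and that command files sit directly in .claude/commands/ and ~/.claude/commands/ (subfolders are ignored); list_claude_commands is a hypothetical helper, not part of the CLI:

```bash
#!/usr/bin/env bash
# list_claude_commands [REPO_ROOT]: hypothetical helper, not part of the Claude Code CLI.
# Prints "<scope> /<name>" for every Markdown command file found in the
# repo-scoped (.claude/commands) and personal (~/.claude/commands) folders.
list_claude_commands() {
  local repo_root=${1:-.}
  local scope dir file name
  for scope in project personal; do
    if [ "$scope" = project ]; then
      dir="$repo_root/.claude/commands"
    else
      dir="$HOME/.claude/commands"
    fi
    [ -d "$dir" ] || continue        # skip a scope that has no command folder
    for file in "$dir"/*.md; do
      [ -e "$file" ] || continue     # the glob matched nothing
      name=$(basename "$file" .md)   # lint.md -> lint
      printf '%s /%s\n' "$scope" "$name"
    done
  done
}
```

Run it from the repository root; it prints one line per command, such as project /lint or personal /prune-branches.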

Pipe test or build output into the CLI and ask for /analyze:

```bash
bun run test 2>&1 | claude -p "/analyze"
```

Claude returns a ranked list of suspected causes plus the files involved. Because the command runs in print mode, the response appears once and the CLI exits, which is useful for CI transcripts or terminal history.
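In CI you usually want the analysis only when the step fails, and you want the original exit code preserved so the job still fails. A minimal sketch, assuming bash: analyze_on_failure and analyzer are hypothetical names, and analyzer simply wraps the same claude -p "/analyze" call shown above so it can be swapped out.

```bash
#!/usr/bin/env bash
# analyzer: hypothetical wrapper around the print-mode call shown above.
# Reads the captured log on stdin.
analyzer() {
  claude -p "/analyze"
}

# analyze_on_failure CMD [ARGS...]: hypothetical helper.
# Runs CMD with stdout and stderr captured to a temp file. On success the
# log is echoed unchanged; on failure it is piped to analyzer instead.
# Either way the function returns CMD's original exit status.
analyze_on_failure() {
  local log status=0
  log=$(mktemp)
  "$@" >"$log" 2>&1 || status=$?
  if [ "$status" -eq 0 ]; then
    cat "$log"
  else
    # Analysis is best-effort; the original status is what gets returned.
    analyzer <"$log" || true
  fi
  rm -f "$log"
  return "$status"
}
```

For example, analyze_on_failure bun run test prints the normal test output when everything passes and only calls Claude when something fails; the exit code seen by CI is unchanged either way.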

1. Create .claude/commands/lint.md in the repository with this prompt:

   ```markdown
   Run `bun run lint`. Show the first command that fails and summarize the error.
   ```

2. Run /reload inside an interactive claude session, or restart the CLI.
3. Invoke /lint.

When the command executes, Claude prints the command it ran, surfaces stdout/stderr, and explains the next step. Keep the instruction short; Claude already has repository context.
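Creating the file from a script is handy when bootstrapping a fresh clone. A minimal sketch, assuming bash; write_command is a hypothetical helper. The quoted heredoc delimiter ('EOF') matters here: it stops the shell from executing the backticks or expanding $-variables inside the prompt.

```bash
#!/usr/bin/env bash
# write_command ROOT NAME: hypothetical helper.
# Copies stdin to ROOT/.claude/commands/NAME.md, creating the folder if needed.
write_command() {
  local root=$1 name=$2
  mkdir -p "$root/.claude/commands"
  cat >"$root/.claude/commands/$name.md"
}

# Example (run from the repository root):
#   write_command . lint <<'EOF'
#   Run `bun run lint`. Show the first command that fails and summarize the error.
#   EOF
```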

Commands accept arguments appended after the name. Example: /lint apps/web. Inside the Markdown prompt, reference $ARGUMENTS to forward them:

```markdown
Run `bun run lint $ARGUMENTS`. If the command fails, print the exit code and the relevant lines from the log.
```

Use this to target sub-packages or language-specific linters without creating multiple command files.
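To preview what a prompt looks like once arguments are forwarded, you can approximate the substitution locally. This is only an approximation under a stated assumption: it treats $ARGUMENTS as a plain text placeholder replaced by everything typed after the command name. render_command is a hypothetical helper and says nothing about how the CLI implements this internally.

```bash
#!/usr/bin/env bash
# render_command FILE [ARGS...]: hypothetical preview helper.
# Approximates argument forwarding by replacing every literal "$ARGUMENTS"
# in the prompt file with the remaining arguments joined by spaces.
render_command() {
  local file=$1
  shift
  local args="$*" template out=""
  template=$(<"$file")
  local rest=$template
  # Copy text up to each placeholder, then the arguments, then continue after it.
  while [[ $rest == *'$ARGUMENTS'* ]]; do
    out+=${rest%%'$ARGUMENTS'*}$args
    rest=${rest#*'$ARGUMENTS'}
  done
  out+=$rest
  printf '%s\n' "$out"
}
```

For example, render_command .claude/commands/lint.md apps/web previews the prompt that /lint apps/web would send.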

A few small commands cover most incidents:

| Command | Purpose |
|---|---|
| /logs failing | Search structured logs under var/log/ and return the newest matches. |
| /tests changed | Run tests for paths changed in the last commit: bun run test -- $ARGUMENTS. |
| /fmt | Execute formatters (bun run biome:format in this repo). |

Keep commands deterministic. Avoid instructions that ask Claude to guess or invent fixes; treat the CLI as a wrapper around the tooling you already trust.
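The /tests changed row needs a deterministic list of paths. One way to get it is git diff --name-only HEAD~1 HEAD, which prints the files touched by the last commit. A minimal sketch, assuming bash and that the test runner accepts file paths after --; tests_for_changed is a hypothetical helper that reads that list on stdin and prints the command instead of running it, so you can inspect it first.

```bash
#!/usr/bin/env bash
# tests_for_changed: hypothetical helper.
# Reads newline-separated paths on stdin (e.g. from
# `git diff --name-only HEAD~1 HEAD`) and prints one
# `bun run test -- <paths...>` command. Prints nothing if there are no paths.
tests_for_changed() {
  local -a paths=()
  local path
  # The `|| [ -n ... ]` keeps a final line that lacks a trailing newline.
  while IFS= read -r path || [ -n "$path" ]; do
    [ -n "$path" ] && paths+=("$path")
  done
  [ "${#paths[@]}" -gt 0 ] || return 0
  printf 'bun run test --'
  printf ' %q' "${paths[@]}"   # shell-quote each path for safe copy-paste
  printf '\n'
}
```

For example: git diff --name-only HEAD~1 HEAD | tests_for_changed.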

- .claude/settings.local.json – per-repo defaults (selected model, chosen output style).
- .claude/commands/*.md – project commands (commit these when they codify workflow).
- ~/.claude/commands/*.md – personal commands (do not commit).

Check these files into source control only when everyone on the team needs them. Otherwise, add .claude/commands to .gitignore and keep your local automation private.
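If you take the private route, appending the entry only once keeps .gitignore tidy when a setup script runs repeatedly. A minimal sketch, assuming bash; ensure_gitignored is a hypothetical helper.

```bash
#!/usr/bin/env bash
# ensure_gitignored ROOT PATTERN: hypothetical helper.
# Appends PATTERN to ROOT/.gitignore unless an identical line already exists.
ensure_gitignored() {
  local root=$1 pattern=$2
  local file="$root/.gitignore"
  touch "$file"
  if ! grep -qxF -- "$pattern" "$file"; then
    # Add a newline first if the file is non-empty and lacks a trailing one.
    if [ -s "$file" ] && [ -n "$(tail -c 1 "$file")" ]; then
      printf '\n' >>"$file"
    fi
    printf '%s\n' "$pattern" >>"$file"
  fi
}
```

For example, run ensure_gitignored . .claude/commands from the repository root.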

Stash the minimal prompt for each frequent task alongside the command so you can regenerate it quickly:

```markdown
<!-- .claude/commands/status.md -->
Run `git status --short`. Explain staged vs unstaged files. Do not run `git add`.
```

```markdown
<!-- ~/.claude/commands/prune-branches.md -->
Delete all local branches merged into `main`. Show `git branch` output before removing anything.
```
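The prune-branches prompt is the kind of step worth double-checking yourself. git branch --merged main lists local branches already merged into main, and that list can include main itself and the current branch (marked with *). A minimal sketch, assuming bash; merged_branches_to_delete is a hypothetical helper that filters that output so neither of those ever becomes a deletion candidate.

```bash
#!/usr/bin/env bash
# merged_branches_to_delete: hypothetical helper.
# Reads `git branch --merged main` output on stdin and prints branch names
# to consider for `git branch -d`, skipping the current branch (the line
# starting with "*") and main itself.
merged_branches_to_delete() {
  local line name
  while IFS= read -r line || [ -n "$line" ]; do
    case $line in
      '*'*) continue ;;                     # current branch or detached HEAD
    esac
    name=${line#"${line%%[![:space:]]*}"}   # trim leading whitespace
    [ -n "$name" ] || continue
    [ "$name" = main ] && continue
    printf '%s\n' "$name"
  done
}
```

Review the output of git branch --merged main | merged_branches_to_delete before handing the names to git branch -d.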

When a command needs arguments, reference $ARGUMENTS directly instead of creating near-duplicate Markdown files.

Common sequences benefit from a thin wrapper:

- /commit → run git status, show staged diffs, prompt for a summary, then call git commit -m "..." using the supplied message. Keep the Markdown file focused on the order of operations and the allowed commit categories.
- /push → ensure git status --short is clean, then push the current branch. Abort if the working tree is dirty.
- /create-issue → run git diff and git status, summarize the change, then call `@mcp__GitHub__create_issue` with owner/repo preset.

Claude follows the exact shell commands in the prompt, so list them in the order you expect. Avoid phrases like “do whatever is necessary.”
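The /push guard is easy to mirror in a shell script when you want the same rule outside Claude. git status --short prints nothing for a clean working tree (untracked files show up as ?? lines, so they count as dirty), which reduces the check to testing for empty output. A minimal sketch, assuming bash; working_tree_clean is a hypothetical helper that reads that output on stdin so it can be tested without a repository.

```bash
#!/usr/bin/env bash
# working_tree_clean: hypothetical helper.
# Reads `git status --short` output on stdin; succeeds only if it is empty.
working_tree_clean() {
  local status
  status=$(cat)
  [ -z "$status" ]
}

# Example (inside a repository):
#   if git status --short | working_tree_clean; then
#     git push origin HEAD
#   else
#     echo "Working tree is dirty; aborting push." >&2
#   fi
```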

Drop this into .claude/commands/commit.md (adapt category names to your workflow):

```markdown
Show `git status --short`. If nothing is staged, stop.
Run `git diff --cached`.
Ask the user for context, then generate a message in `type: subject` form (types: feat, fix, docs, refactor, test, chore, style).
Confirm the message before running `git commit -m`.
Display `git log -1 --stat` after committing.
```

This keeps every commit interactive but consistent.
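If you also want a hard check outside the prompt, for example in a commit-msg hook, the type: subject rule translates to a single regular expression. A minimal sketch, assuming bash; valid_commit_subject is a hypothetical helper, and its type list simply mirrors the one in the commit.md prompt above.

```bash
#!/usr/bin/env bash
# valid_commit_subject MESSAGE: hypothetical helper.
# Succeeds if the first line of MESSAGE has the form "type: subject",
# where type is one of the categories listed in commit.md.
valid_commit_subject() {
  local first_line=${1%%$'\n'*}   # drop everything after the first newline
  local re='^(feat|fix|docs|refactor|test|chore|style): [^[:space:]].*$'
  [[ $first_line =~ $re ]]
}
```

In a commit-msg hook, Git passes the path of the message file as the first argument, so the check would be valid_commit_subject "$(cat "$1")".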

- … @mcp…) should include rate-limit handling and always echo the command they attempted.
- … --watch variant if the project uses one.
- … .claude/settings.local.json or the host application’s environment variable injection instead.

Brief documentation inside the repository helps new contributors understand why the commands exist and where to extend them. Whenever the workflow changes, update the Markdown first so the CLI remains trustworthy.
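For the rate-limit advice, a retry loop that echoes each attempt keeps failures visible in the transcript. A minimal sketch, assuming bash; retry_with_backoff and its MAX_ATTEMPTS and BASE_DELAY variables are hypothetical, and it retries on any non-zero exit rather than detecting rate-limit errors specifically.

```bash
#!/usr/bin/env bash
# retry_with_backoff CMD [ARGS...]: hypothetical helper.
# Echoes the command to stderr before each attempt, retries on any non-zero
# exit, and doubles the delay (seconds) between attempts.
# Returns the exit status of the last attempt.
retry_with_backoff() {
  local max=${MAX_ATTEMPTS:-3} delay=${BASE_DELAY:-1}
  local attempt=1 status
  while :; do
    echo "attempt $attempt/$max: $*" >&2
    status=0
    "$@" || status=$?
    if [ "$status" -eq 0 ] || [ "$attempt" -ge "$max" ]; then
      return "$status"
    fi
    sleep "$delay"
    delay=$((delay * 2))
    attempt=$((attempt + 1))
  done
}
```

Prefix a call with MAX_ATTEMPTS=… or BASE_DELAY=… to tune it per command.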